When I launched SQL 2017 configuration manager, I got the error below.

To fix the issue, I ran the following command in command prompt on the server.

mofcomp "%programfiles(x86)%\Microsoft SQL Server\140\Shared\sqlmgmproviderxpsp2up.mof"

I was able to open SQL server configuration manager.

Thank you for reading my blog!

Good Bye 2018...

Hello 2019..............

Thank you for visiting my blog. My site is intended to share information and knowledge with others as well as a reference site for me.

Friday, December 28, 2018

Creating Alias for SQL server

To Create an alias for SQL instance

Open SQL Server Configuration Manager

Go to both SQL Native Client branch on 32 and 64 bit. Please see screenshot below.

Right click on the right side of the window pane.

Alias Name : any name

Port No : 1433 (SQL default Port no)

Protocol : TCP/IP

Server : Fully qualified local server name(ex. server.domain.us.dom)

Next step is very important. We need to add the alias to DNS entry.

Please add the following SQL server alias to DNS entry alias/CName.

Alias Name : Newalias

Fully Qualified domain Name(FQDN)for target host : server.domain.us.dom

Fully Qualified domain Name(FQDN)for target host : server.domain.us.dom

After adding it to DNS entry, we will be able to connect to Alias using SSMS from our desktop or application.

Thursday, November 15, 2018

Evict and Destroy a Cluster

In powershell,

get-clusternode

#Please do the following steps first.

# Step 1. Pause and Drain Role on a secondary node

# Step 2. Stop Cluster service on the node to be evicted

#Now proceed to remove a secondary cluster Node

Remove-ClusterNode -Name ServerName

#Now on the last node, Remove the cluster and cleanup

Get-Cluster ClusterName | Remove-Cluster -Force -CleanupAD

get-clusternode

#Please do the following steps first.

# Step 1. Pause and Drain Role on a secondary node

# Step 2. Stop Cluster service on the node to be evicted

#Now proceed to remove a secondary cluster Node

Remove-ClusterNode -Name ServerName

#Now on the last node, Remove the cluster and cleanup

Get-Cluster ClusterName | Remove-Cluster -Force -CleanupAD

Friday, August 17, 2018

How long did t take to load One point five Billion rows using ETL data flow ?

1.5 + Billion rows was Loaded to a table using ETL data flow. It took about 15 hrs 9 mins and 59 seconds.

Both data source and Destination are tables

2000 rows / batch

Table load - fast load

A few warning

[SSIS.Pipeline] Information: The buffer manager detected that the system was low on virtual memory, but was unable to swap out any buffers. 5 buffers were considered and 5 were locked. Either not enough memory is available to the pipeline because not enough is installed, other processes are using it, or too many buffers are locked.

[SSIS.Pipeline] Warning: Warning: Could not open global shared memory to communicate with performance DLL; data flow performance counters are not available. To resolve, run this package as an administrator, or on the system's console.

Thursday, August 16, 2018

Rolling Cluster upgrade to Windows 2012R2 to Windows 2016 - (SQL Server availability group is a workload)

The cluster has two nodes and the workload is SQL server availability group.

Step 1

- Drain role on one of the nodes

- Evict the node from a cluster

- Upgrade OS to Windows 2016

- After upgrading successfully, add the node back to the cluster

SQL Listener

During the node 1 upgrade, SQL listener is online and application has no down time.

ClusterFunctionalLevel Verificaton

Open PowerShell , Run

PS C:\WINDOWS\system32> get-cluster | select clusterfunctionallevel

ClusterFunctionalLevel

----------------------

8

you will get ClusterFunctionalLevel 8 ( which is a mixed mode clustering )

Step 2

- Drain role on the second node

- Evict the node from a cluster

- Upgrade the node OS to Windows 2016

- After upgrading successfully, add the node back to the cluster

Step 3

- Run validate cluster

- Check ClusterFunctionalLevel again

Open PowerShell , Run

PS C:\WINDOWS\system32> get-cluster | select clusterfunctionallevel

ClusterFunctionalLevel

----------------------

8

you will get ClusterFunctionalLevel 8 ( which is a mixed mode clustering )

Final Step

PS C:\WINDOWS\system32> Update-ClusterFunctionalLevel

NOTE: after you run this command, you cannot rollback.

if it runs successfully, you will get

PS C:\WINDOWS\system32> get-cluster | select clusterfunctionallevel

ClusterFunctionalLevel

----------------------

9

Summary

- Windows Server 2016 makes upgrading clusters easy with rolling upgrades

- Control when your cluster upgrade is complete with the Cluster Functional Level

- Add and manage your Windows Server 2016 cluster from the up-level (2016) nodes

Wednesday, July 25, 2018

SQL Server 2016 - Query Store

I was taking a Plural sight introduction class. Here I am sharing what I learnt from the course.

ALTER DATABASE DBA SET QUERY_STORE CLEAR;GO

[qst].[query_text_id],

[qsq].[query_id],

[qsq].[object_id],

[qsq].[context_settings_id],

[qst].[query_sql_text],

[qsq].[initial_compile_start_time],

[qsq].[last_compile_start_time],

[qsq].[last_execution_time],

[qsq].[avg_compile_duration],

[qsq].[count_compiles],

[qsq].[avg_optimize_cpu_time],

[qsq].[avg_optimize_duration]

FROM [sys].[query_store_query] [qsq]

JOIN [sys].[query_store_query_text] [qst]

ON [qsq].[query_text_id] = [qst].[query_text_id];

GO

SELECT

[qsq].[query_id],

[qsp].[plan_id],

[qsq].[object_id],

[qsq].[initial_compile_start_time],

[qsq].[last_compile_start_time],

[rs].[first_execution_time],

[rs].[last_execution_time],

[rs].[avg_duration],

[rs].[avg_logical_io_reads],

TRY_CONVERT(XML, [qsp].[query_plan]),

[qsp].[query_plan],

[rs].[count_executions],

[qst].[query_sql_text]

FROM [sys].[query_store_query] [qsq]

JOIN [sys].[query_store_query_text] [qst]

ON [qsq].[query_text_id] = [qst].[query_text_id]

JOIN [sys].[query_store_plan] [qsp]

ON [qsq].[query_id] = [qsp].[query_id]

JOIN [sys].[query_store_runtime_stats] [rs]

ON [qsp].[plan_id] = [rs].[plan_id]

WHERE [qsp].[query_id] = 3

SELECT

[qsq].[query_id],

COUNT([qsp].[plan_id]) AS [PlanCount],

[qsq].[object_id],

MAX(DATEADD(MINUTE, -(DATEDIFF(MINUTE, GETDATE(), GETUTCDATE())),

[qsp].[last_execution_time])) AS [LocalLastExecutionTime],

MAX([qst].query_sql_text) AS [Query_Text]

FROM [sys].[query_store_query] [qsq]

JOIN [sys].[query_store_query_text] [qst]

ON [qsq].[query_text_id] = [qst].[query_text_id]

JOIN [sys].[query_store_plan] [qsp]

ON [qsq].[query_id] = [qsp].[query_id]

WHERE [qsp].[last_execution_time] > DATEADD(HOUR, -8, GETUTCDATE())

GROUP BY [qsq].[query_id], [qsq].[object_id]

HAVING COUNT([qsp].[plan_id]) > 1;

GO

SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED;

GO

SELECT

[qs].[execution_count],

[qs].[last_execution_time],

[s].[text],

[qp].[query_plan],

[qs].[plan_handle]

FROM [sys].[dm_exec_query_stats] AS [qs]

CROSS APPLY [sys].[dm_exec_query_plan] ([qs].[plan_handle]) AS [qp]

CROSS APPLY [sys].[dm_exec_sql_text]([qs].[plan_handle]) AS [s]

JOIN [sys].[dm_exec_cached_plans] AS [cp]

ON [qs].[plan_handle] = [cp].[plan_handle]

WHERE [s].[text] LIKE '%CustomerTransactionInfo%';

GO

SET TRANSACTION ISOLATION LEVEL READ COMMITTED;

GO

SQL Server 2016 Query Store

- Enable on a per database level

- Data persisted in internal tables in the user databases

- Restored databases will have a query store database

Enabling Query Store with GUI

USE [master]

GO

ALTER DATABASE [DBA] SET QUERY_STORE = ON

GO

ALTER DATABASE [DBA] SET QUERY_STORE (OPERATION_MODE = READ_ONLY)

GO

USE [master];

GO

ALTER DATABASE [DBA]

SET QUERY_STORE = ON;

Go

ALTER DATABASE [DBA]

SET QUERY_STORE (

OPERATION_MODE = READ_WRITE,

CLEANUP_POLICY =

(STALE_QUERY_THRESHOLD_DAYS = 30),

DATA_FLUSH_INTERVAL_SECONDS = 900,

INTERVAL_LENGTH_MINUTES = 60,

MAX_STORAGE_SIZE_MB = 100,

QUERY_CAPTURE_MODE = ALL,

SIZE_BASED_CLEANUP_MODE = AUTO,

MAX_PLANS_PER_QUERY = 200);

GO

Query Store collection setting

- Operation Mode = Read_Write | Read Only

- Query_Caputre_Mode = ALL | AUTO | NONE

- Max Plans Per Query = 200 different plan for a given query (default)

- Max Storage Size MB = 2 GB (Start with)

- CleanUp_Policy = (Stale query threshold days) 30 days

- Sized based cleanup mode = Auto | OFF

USE [master];

GO

ALTER DATABASE [DBA] SET QUERY_STORE (

OPERATION_MODE = READ_WRITE,

/*

READ_WRITE = data collection

(default)

READ_ONLY = no data collection

*/

QUERY_CAPTURE_MODE = ALL,

/*

are ALL queries captured,

or only "relevant" ones?

ALL = every query executed

(default)

AUTO = infrequent & insignificant

NONE = nothing new added

*/

MAX_PLANS_PER_QUERY = 200);

/*

INT data type

*/

GO

NOTE : Changing query store setting in a database will flush all cached plan from the particular database.

- Operation Mode = Read_Write | Read Only

- Query_Caputre_Mode = ALL | AUTO | NONE

- Max Plans Per Query = 200 different plan for a given query (default)

- Max Storage Size MB = 2 GB (Start with)

- CleanUp_Policy = (Stale query threshold days) 30 days

- Sized based cleanup mode = Auto | OFF

NOTE : Changing query store setting in a database will flush all cached plan from the particular database.

Query Store GUI

Permission to use Query Store

- VIEW DATABASE STATE

- db_owner needed to force/unforced plans

- VIEW DATABASE STATE

- db_owner needed to force/unforced plans

What is Query Store capturing?

Runtime Stats Store(Performance data)

- Compile time

- last execution time

- Duration

- CPU

- logical reads

- Physical reads

- Write

- Compile time

- last execution time

- Duration

- CPU

- logical reads

- Physical reads

- Write

Plan Store

- Query text

- Query plan

- Query text

- Query plan

Script to check Query store Setting in DMV

SELECT

[actual_state_desc],

[readonly_reason],

[desired_state_desc],

[current_storage_size_mb],

[max_storage_size_mb],

[flush_interval_seconds],

[interval_length_minutes],

[stale_query_threshold_days],

[size_based_cleanup_mode_desc],

[query_capture_mode_desc],

[max_plans_per_query]

FROM [sys].[database_query_store_options];

GO

Remove everything from Query Store

--Truncating sys tables behind the scenesALTER DATABASE DBA SET QUERY_STORE CLEAR;GO

Query store System Views

- sys.query_store_query

- sys.query_store_query_text

- sys.query_store_plan

- sys.query_store_runtime_stats

Query Store system views script

Query compile and optimization information

SELECT[qst].[query_text_id],

[qsq].[query_id],

[qsq].[object_id],

[qsq].[context_settings_id],

[qst].[query_sql_text],

[qsq].[initial_compile_start_time],

[qsq].[last_compile_start_time],

[qsq].[last_execution_time],

[qsq].[avg_compile_duration],

[qsq].[count_compiles],

[qsq].[avg_optimize_cpu_time],

[qsq].[avg_optimize_duration]

FROM [sys].[query_store_query] [qsq]

JOIN [sys].[query_store_query_text] [qst]

ON [qsq].[query_text_id] = [qst].[query_text_id];

GO

Query plan and execution information

SELECT

[qsq].[query_id],

[qsp].[plan_id],

[qsq].[object_id],

[qsq].[initial_compile_start_time],

[qsq].[last_compile_start_time],

[rs].[first_execution_time],

[rs].[last_execution_time],

[rs].[avg_duration],

[rs].[avg_logical_io_reads],

TRY_CONVERT(XML, [qsp].[query_plan]),

[qsp].[query_plan],

[rs].[count_executions],

[qst].[query_sql_text]

FROM [sys].[query_store_query] [qsq]

JOIN [sys].[query_store_query_text] [qst]

ON [qsq].[query_text_id] = [qst].[query_text_id]

JOIN [sys].[query_store_plan] [qsp]

ON [qsq].[query_id] = [qsp].[query_id]

JOIN [sys].[query_store_runtime_stats] [rs]

ON [qsp].[plan_id] = [rs].[plan_id]

WHERE [qsp].[query_id] = 3

Queries executed in the last 8 hours with multiple plans

SELECT

[qsq].[query_id],

COUNT([qsp].[plan_id]) AS [PlanCount],

[qsq].[object_id],

MAX(DATEADD(MINUTE, -(DATEDIFF(MINUTE, GETDATE(), GETUTCDATE())),

[qsp].[last_execution_time])) AS [LocalLastExecutionTime],

MAX([qst].query_sql_text) AS [Query_Text]

FROM [sys].[query_store_query] [qsq]

JOIN [sys].[query_store_query_text] [qst]

ON [qsq].[query_text_id] = [qst].[query_text_id]

JOIN [sys].[query_store_plan] [qsp]

ON [qsq].[query_id] = [qsp].[query_id]

WHERE [qsp].[last_execution_time] > DATEADD(HOUR, -8, GETUTCDATE())

GROUP BY [qsq].[query_id], [qsq].[object_id]

HAVING COUNT([qsp].[plan_id]) > 1;

GO

Confirm the plan that's being used now

SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED;

GO

SELECT

[qs].[execution_count],

[qs].[last_execution_time],

[s].[text],

[qp].[query_plan],

[qs].[plan_handle]

FROM [sys].[dm_exec_query_stats] AS [qs]

CROSS APPLY [sys].[dm_exec_query_plan] ([qs].[plan_handle]) AS [qp]

CROSS APPLY [sys].[dm_exec_sql_text]([qs].[plan_handle]) AS [s]

JOIN [sys].[dm_exec_cached_plans] AS [cp]

ON [qs].[plan_handle] = [cp].[plan_handle]

WHERE [s].[text] LIKE '%CustomerTransactionInfo%';

GO

SET TRANSACTION ISOLATION LEVEL READ COMMITTED;

GO

Query Store for a stored proc

SELECT

[qsq].[query_id],

[qsp].[plan_id],

[qsq].[object_id],

[rs].[count_executions],

DATEADD(MINUTE, -(DATEDIFF(MINUTE, GETDATE(), GETUTCDATE())),

[qsp].[last_execution_time]) AS [LocalLastExecutionTime],

[qst].[query_sql_text],

ConvertedPlan = TRY_CONVERT(XML, [qsp].[query_plan])

FROM [sys].[query_store_query] [qsq]

JOIN [sys].[query_store_query_text] [qst]

ON [qsq].[query_text_id] = [qst].[query_text_id]

JOIN [sys].[query_store_plan] [qsp]

ON [qsq].[query_id] = [qsp].[query_id]

JOIN [sys].[query_store_runtime_stats] [rs]

ON [qsp].[plan_id] = [rs].[plan_id]

WHERE [qsq].[object_id] = OBJECT_ID(N'usp_GetMovie');

GO

Tuesday, July 10, 2018

Visual Studio 2017 Pro - SQL Schema Compare

SqlSchemaCompare

SQL Schema Compare from VS 2017 will compare all the objects from Source database to Target database.

Click on Tools > SQL Server > New Schema Comparism

Enter Source and Target Server and Database name

Click on Compare

After clicking compare button, you will see the objects which needs to be deleted, added , chanaged in Target Database.

You can update the Target my clicking Update button.

To generate the script, click on generate Script button

Sometimes, we need to compare indexes and tables between two databases. This is a useful tool for us.

Day 4- R Data Structure

Data Structures

Homogeneouse data structure

Atomic vector, Matrix, Array

Hetrogeneous data structure

List, Data Frame

Basic Classes of Objects

Atomic Classes

- Collection of data elements

- Grouped under one name

- Container

Homogeneouse data structure

Atomic vector, Matrix, Array

Hetrogeneous data structure

List, Data Frame

- Atomic vector

- 1 Dimension

- Matrix

- 2 Dimension

- Array

- n Dimension

- List

- 1 Dimension

- Data frame

- 2 Dimension

Basic Classes of Objects

Atomic Classes

Character

“A”, “c”

Numeric

4.36, 7.42

Integer

3,5

Logical

True, False

Complex

1 + 7i , 8 - 2i

Atomic Vector

- Commonly knows as vectors

- Homogeneous data structure

# Character

student.names <‐ c("Raj","Rahul","Priya","Poonam")

# Numeric

student.weights <‐ c( 60.5, 72.5 , 45.2, 47.5)

# Integer

student.physics.marks <‐ c( 70L , 75L , 80L, 85L)

# Logical

student.physics.interest <‐ c(FALSE, F, TRUE, T)

Common Operations on Atomic Vectors

- Arithmetic & logical Subsetting Coercion

Factor

- Special case of vector used to store nominal values

student.genders <‐ c(“Male",“Male",“Female",“Female")

student.genders <‐ factor(c(“Male",“Male",“Female",“Female"))

List

- Heterogeneous data structure

- Can contain items of different classes

- 1-Dimensional arrangement

Monday, June 11, 2018

Day 3 - R Variables and Operators

Variable

Name Convention

Name Convention

- Valid names: letters, numbers , dots or underline characters

- Do not user RESERVE

- Use lowercase letters

- Separate words using dot(.)

- Do not use underscores(_) or hyphens(-)

Custom Variable

Global Variable

Type of Vectorized Operation

Wednesday, June 6, 2018

R Programming Fundamental - Day 2 Help command

Help

Today, I am learning Help() and Vignette() commands.

help(command)

?command

help(rnorm)

?rnorm

help.search("rnorm")

??rnorm

Vignette

Getting package information in pdf format without internet

vignette()

vignette(package=.packages(all.available = TRUE))

vignette('parallel')

vignette('parallel', package='parallel')

Web Search

http://search.r-project.org

RSiteSearch("arithmetic mean")

To install a package

install.packages("sos")

To load that to library

library(sos)

Find function in R site

findFn("{arithmetic mean}")

findFn("{arithmetic mean}",maxPages=2)

Today, I am learning Help() and Vignette() commands.

help(command)

?command

help(rnorm)

?rnorm

help.search("rnorm")

??rnorm

To see all available demo packages

demo(package = .packages(all.available = TRUE))

Demo in package 'graphics'

demo(package ='graphics')

demo(graphics)

Vignette

Getting package information in pdf format without internet

vignette()

vignette(package=.packages(all.available = TRUE))

vignette('parallel')

vignette('parallel', package='parallel')

Web Search

http://search.r-project.org

RSiteSearch("arithmetic mean")

To install a package

install.packages("sos")

library(sos)

Find function in R site

findFn("{arithmetic mean}")

findFn("{arithmetic mean}",maxPages=2)

Thank you for learning R with me.

Tuesday, June 5, 2018

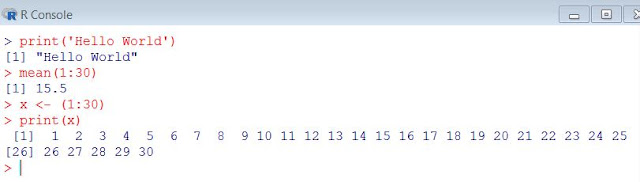

R Programming Fundamental - Day 1

Learning R - Day 1

Download Rgui from https://cran.r-project.org/

Call mean function

mean (1:30)

Print Hello world

Print ('Hello World')

Assign values to a variable

Editors for R (Small projects)

Windows : R-Gui

Mac : R.app

Linux : No specific

Other editors

Emac

Vim-R

NPPToR

IDE for R (Bigger projects)

Eclipse

used by java developer

StatET plugin

Architect

R oriented variant of Eclipse

Revolution-R

Enterprise edition

Live-R

R in web application

RStudio * (Free)

R oriented IDE

RStudio Installation

- go to https://www.rstudio.com

- download Rstudio Desktop and install

RStudio Desktop Overview

To change appearance , Tools -> Global Option -> Appearance

RStudio Doc

https://support.rstudio.com/hc/en-us/categories/200035113-Documentation?version=1.1.453&mode=desktop

Packages window

# is used for Commenting

Ctl +Enter to execute R Script

Example R script

To execute , click on Run or Ctl+Enter

Credits go to https://app.pluralsight.com/library/courses/r-programming-fundamentals

Thursday, February 8, 2018

AlwaysOn Availability group Auto Seeding

Hi This is my first blog in 2018. I am writing about Alwayson availability group auto seeding feature in SQL server 2016.

Step 3

SQLHAPOCR01 (Primary Replica)

SQLHAPOCR02(Secondary Replica)

I have already enabled alwayson availability group feature in both SQL servers.

There are a few preparation steps to do before creating Availability group with auto seeding.

Turn on 9567 trace on Primary replica to enable compression backup.

DBCC TRACEON (9567,-1);

GO

Create Extended Events session monitor

CREATE EVENT SESSION [AlwaysOn_autoseed] ON SERVER

ADD EVENT sqlserver.hadr_automatic_seeding_state_transition,

ADD EVENT sqlserver.hadr_automatic_seeding_timeout,

ADD EVENT sqlserver.hadr_db_manager_seeding_request_msg,

ADD EVENT sqlserver.hadr_physical_seeding_backup_state_change,

ADD EVENT sqlserver.hadr_physical_seeding_failure,

ADD EVENT sqlserver.hadr_physical_seeding_forwarder_state_change,

ADD EVENT sqlserver.hadr_physical_seeding_forwarder_target_state_change,

ADD EVENT sqlserver.hadr_physical_seeding_progress,

ADD EVENT sqlserver.hadr_physical_seeding_restore_state_change,

ADD EVENT sqlserver.hadr_physical_seeding_submit_callback

ADD TARGET package0.event_file(SET filename=N'C:\autoseed.xel',max_file_size=(5),max_rollover_files=(4))

WITH (MAX_MEMORY=4096 KB,EVENT_RETENTION_MODE=ALLOW_SINGLE_EVENT_LOSS,MAX_DISPATCH_LATENCY=30 SECONDS,MAX_EVENT_SIZE=0 KB,MEMORY_PARTITION_MODE=NONE,TRACK_CAUSALITY=OFF,STARTUP_STATE=ON)

GO

ALTER EVENT SESSION AlwaysOn_autoseed ON SERVER STATE=START

GO

After above steps are done, we will follow the steps below.

Step 1

Create availability group with Auto Seeding is automatic. Run this on Primary Replica. Turn on SQLCMD

:Connect SQLHAPOCR01

CREATE AVAILABILITY GROUP SQLHAPOCDR

FOR

REPLICA ON N'SQLHAPOCR01' WITH (ENDPOINT_URL = N'TCP://SQLHAPOCR01.TEST.US.DOM:5022', FAILOVER_MODE = AUTOMATIC, AVAILABILITY_MODE = SYNCHRONOUS_COMMIT, SEEDING_MODE=AUTOMATIC),

N'SQLHAPOCR02' WITH (ENDPOINT_URL = N'TCP://SQLHAPOCR02.TEST.US.DOM:5022', FAILOVER_MODE = AUTOMATIC, AVAILABILITY_MODE = SYNCHRONOUS_COMMIT, SEEDING_MODE=AUTOMATIC);

Go

Step 2

Connect to a Secondary replica and join Secondary replica to AG. Give Create database permission to AG group. Run this on Secondary Replica. Turn on SQLCMD

:Connect SQLHAPOCR02

ALTER AVAILABILITY GROUP SQLHAPOCDR JOIN

GO

:Connect SQLHAPOCR02

ALTER AVAILABILITY GROUP SQLHAPOCDR

GRANT CREATE ANY DATABASE

GO

Step 3

Join an existing database on Primary to AG group. Run on Primary. Turn on SQLCMD

:Connect SQLHAPOCR01

alter AVAILABILITY group SQLHAPOCDR add database TESTAUTOSEED

go

Step 4

I will monitor auto seeding status using the following DMVs and queries.

select * from sys.dm_hadr_automatic_seeding

select * from sys.dm_hadr_physical_seeding_stats

SELECT start_time,

completion_time,

is_source,

current_state,

failure_state,

failure_state_desc,

error_code

FROM sys.dm_hadr_automatic_seeding

My test database is 100 Gbs and it took about 9 mins to finish auto seeding and synchronize.

Thank you for reading my blog.

Subscribe to:

Comments (Atom)

How to add a Database to AlwaysOn Availability Group with four different options

To add a database to an existing AlwaysOn availability group, MS has given us four options to choose from Automatic seeding Full database an...

-

Tempdb filled up last night and databases availability group are not accessible in secondary replica. After restarting a secondary SQL insta...

-

After changing a service account running SQL server, I get an error when I connect to SQL server from Management Studio from my laptop or ot...

-

The cluster core resource was in a failed state after the witness went down several times. The witness came back online but the cluster reso...